Hi @zoltan.zvara

Short answer - it’s because you’ve set numATRs so high. With the default settings, the overhead from transactions cleanup is about 17 reads/sec for each set of ATRs. With your settings it will instead be about 341 reads/sec per set.

Longer answer:

To quickly summarise transactions cleanup first (I think you already understand it; this is more for others coming across this thread):

- Usually transactions will complete inside the transaction.run(). However, there are cases where this isn’t possible, including application crashes, so we have a cleanup process. In fact we have two; the one we’re talking about now we call “lost” or “polling” cleanup.

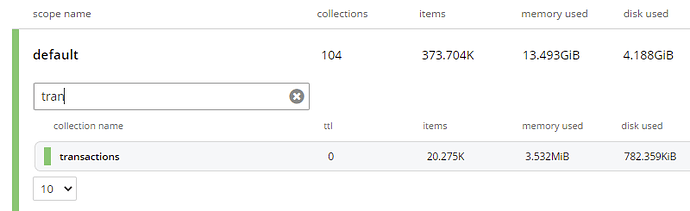

- Transactions use Active Transaction Records (ATRs). These are regular documents you can see in the UI. Each transaction attempt has an entry in an ATR (a single transaction can involve multiple attempts), and a single ATR can hold entries for multiple attempts.

- The default number of ATRs is 1,024, and by default you’ll get one set per bucket. You can use the metadataCollection() config param to choose where they go, and customise the number with numATRs(). (I see you’re using both.)

- Polling cleanup works by polling every ATR once per configured cleanupWindow(). It is performed automatically by the Transactions object as long as cleanupLostAttempts() is true (the default). By default it looks in the default location on each bucket, but if metadataCollection() is used, it will only look there.

- Polling cleanup uses a lightweight communication protocol, involving a doc “_txn:client-record”, that shares the ATRs out between all Transactions objects looking at that bucket or collection. E.g. if you scale to 100 applications, the polling overhead does not scale up with it.
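To make the sharing in the last point concrete, here is a minimal Python sketch. It assumes, purely for illustration, that the active clients listed in the client record split one set of ATRs round-robin by their position in that list; the real “_txn:client-record” protocol has more to it, and the function names here are hypothetical. What it shows is the property described above: each client polls a smaller share as clients are added, while the total reads per cleanupWindow stay equal to the number of ATRs.

```python
from typing import List

# Simplified model of polling cleanup sharing one set of ATRs between clients.
# ASSUMPTION (illustrative only): clients split the ATRs round-robin by their
# index in the list of active clients. Not the actual client-record protocol.


def atrs_for_client(client_index: int, num_clients: int, num_atrs: int = 1024) -> List[int]:
    """Return the ATR indices this client would poll in one cleanupWindow."""
    if not 0 <= client_index < num_clients:
        raise ValueError("client_index must be in [0, num_clients)")
    return [atr for atr in range(num_atrs) if atr % num_clients == client_index]


def total_polls_per_window(num_clients: int, num_atrs: int = 1024) -> int:
    """Total ATR reads across all clients in one cleanupWindow."""
    return sum(len(atrs_for_client(i, num_clients, num_atrs)) for i in range(num_clients))


if __name__ == "__main__":
    for clients in (1, 10, 100):
        share = len(atrs_for_client(0, clients))
        print(f"{clients:>3} clients: client 0 polls {share} ATRs, "
              f"total {total_polls_per_window(clients)} per window")
```

With 1, 10 and 100 clients, client 0 polls 1,024, 103 and 11 ATRs respectively, and the total stays at 1,024 in every case.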

The polling overhead itself is easy to calculate. Each set of ATRs gets fully polled once every cleanupWindow, so the read rate is (numATRs * N / cleanupWindow), where N is how many sets of ATRs you have. So with transactions on a single bucket and the default settings (1,024 ATRs and a 60 second cleanupWindow), it is 1024 * 1 / 60 ≈ 17 reads per second for the polling. If any expired/lost transactions are found, there are of course additional writes to fix those, but this should be rare in normal operation.
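The arithmetic above as a small Python function. The function and parameter names are hypothetical and simply mirror numATRs, N and cleanupWindow; it counts only the steady-state polling reads, not the occasional writes to repair lost attempts.

```python
def polling_reads_per_second(num_atrs: int = 1024, num_sets: int = 1,
                             cleanup_window_s: float = 60.0) -> float:
    """Steady-state ATR reads/sec from polling cleanup: every ATR in every set
    is read once per cleanupWindow. Repair writes are not included."""
    if cleanup_window_s <= 0:
        raise ValueError("cleanup_window_s must be positive")
    return num_atrs * num_sets / cleanup_window_s


default_rate = polling_reads_per_second()                # 1024 * 1 / 60
custom_rate = polling_reads_per_second(num_atrs=20_480)  # 20480 * 1 / 60
print(f"default: {default_rate:.1f} reads/s, numATRs=20480: {custom_rate:.1f} reads/s")
```

This prints about 17.1 reads/s for the defaults and about 341.3 reads/s with numATRs set to 20,480, matching the figures in this thread; doubling N or halving cleanupWindow doubles the rate.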

We feel 17 reads per second per set of ATRs is not excessive overhead, and keeping it low is part of why we’ve chosen the defaults of 1,024 ATRs and a 60 second cleanupWindow, which could be considered conservative. Polling is all reads, which are much cheaper than writes.

In your case, I see you’ve set numATRs to 20x the default (20,480), and this is basically why you’re seeing so many reads. You now have 20,480 * N / 60 ≈ 341 * N read ops per second.

We’ve provided the option to configure the number of ATRs for users who really need to push transactional throughput: theoretically the ATRs could become a bottleneck at some point, though in practice we have not yet seen this happen. We believe the default of 1,024 will work fine for the vast majority of users, and it keeps the polling overhead down. If you don’t have multiple transactions trying to write to the same ATR concurrently, you’ll see zero benefit from increasing it. One way to check this is the transaction logs: they show how long each operation takes, so you can see whether the ATR writes take substantially longer on average than other mutations. You can also use the recently added OpenTelemetry support for this.
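If you pull per-operation timings out of the transaction logs or OpenTelemetry spans, the comparison described above is just a ratio of averages. This Python sketch assumes you have already extracted (kind, duration_ms) pairs; the kind labels "atr_write" and "mutation" and the sample values are made up for illustration and do not correspond to any real log format. A ratio well above 1.0 would point to ATR contention, while a ratio near 1.0 suggests there is little to gain from raising numATRs.

```python
from statistics import mean
from typing import Iterable, Tuple

# HYPOTHETICAL input: (operation_kind, duration_ms) pairs you have extracted
# yourself from transaction logs or tracing spans. The labels are illustrative.


def atr_contention_ratio(records: Iterable[Tuple[str, float]]) -> float:
    """Mean ATR-write latency divided by mean latency of other mutations."""
    records = list(records)  # allow any iterable, including one-shot generators
    atr = [d for kind, d in records if kind == "atr_write"]
    other = [d for kind, d in records if kind == "mutation"]
    if not atr or not other:
        raise ValueError("need at least one ATR write and one other mutation")
    return mean(atr) / mean(other)


sample = [("atr_write", 2.1), ("mutation", 1.9), ("atr_write", 2.3), ("mutation", 2.0)]
print(f"ATR/mutation latency ratio: {atr_contention_ratio(sample):.2f}")
```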

To answer your other questions:

> In my idea, transaction records would stay at around 0 during proper clean up

Yes, with recent optimisations to the protocol, you should see the ATRs stay pretty small the majority of the time. Unless you’re really pushing the throughput, I’d expect them to be nearly empty. (The optimisation is that the final stage of the protocol now removes the ATR entry immediately, rather than a little later.) So there is now usually nothing for either of the two cleanup processes to do.
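A rough way to see why the ATRs stay nearly empty is Little’s law (average occupancy = arrival rate × time each entry stays). This Python sketch assumes attempts are spread evenly over the ATRs and that, with the optimisation, an entry lives for roughly as long as the transaction itself; the function name and the throughput and duration figures are hypothetical examples, not measurements.

```python
def expected_entries_per_atr(txn_per_second: float, residence_s: float,
                             num_atrs: int = 1024) -> float:
    """Little's law (L = lambda * W): average number of attempt entries present
    across all ATRs, divided over num_atrs.
    ASSUMES attempts are spread uniformly over the ATRs and each entry stays
    in its ATR for residence_s seconds."""
    if num_atrs <= 0:
        raise ValueError("num_atrs must be positive")
    return txn_per_second * residence_s / num_atrs


# Hypothetical workload: 1,000 transactions/s, each entry present for ~50 ms.
print(f"{expected_entries_per_atr(1000, 0.05):.3f} entries per ATR on average")
```

With those illustrative numbers the average is about 0.05 entries per ATR. Shortening how long each entry stays in the ATR, which is what the optimisation does, is what drives occupancy toward zero.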

> I could not find if the number of ATRs affects the cleanup-stress in any way on the server.

Hopefully that is now answered? I will add something to the documentation on this.