Hi @tommie,

All my changes are applied correctly the first time. The steps to reproduce the issue are:

- Apply the config with the LDAP settings → works just fine

→ kubectl describe cbc cb-cluster also gives back the correct config

- Remove one of the pods to trigger reconciliation by the operator

→ kubectl describe cbc cb-cluster still gives back the correct config

→ The operator tries to update the status and reports that it could not do so because of a validation error on tlsSecret
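As commands, the steps above look roughly like the sketch below. The manifest file name and the `reproduce` helper are placeholders I made up for illustration; only `kubectl describe cbc cb-cluster` and the pod names come from my actual setup.

```shell
#!/usr/bin/env bash
# Sketch of the reproduction steps. The function name and the manifest
# file name are hypothetical; adjust them to your environment.
KUBECTL="${KUBECTL:-kubectl}"

reproduce() {
  local manifest="$1"   # hypothetical file containing the cluster spec with the LDAP settings
  local pod="$2"        # pod to remove, e.g. cb-cluster-0020

  # 1. Apply the config with the LDAP settings and check the result.
  "$KUBECTL" apply -f "$manifest"
  "$KUBECTL" describe cbc cb-cluster

  # 2. Remove one pod to trigger reconciliation by the operator.
  "$KUBECTL" delete pod "$pod"

  # 3. The config still looks correct here; the failure only shows up
  #    in the operator log when it tries to update the status.
  "$KUBECTL" describe cbc cb-cluster
}
```

Called as `reproduce cb-cluster.yaml cb-cluster-0020`, this runs the same sequence I went through by hand.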

An extract of the operator logs is attached:

{"level":"info","ts":1652426735.2141702,"logger":"cluster","msg":"Resource updated","cluster":"default/cb-cluster","diff":" (\n \t\"\"\"\n \tauthenticationEnabled: true\n \tauthorizationEnabled: true\n \tbindDN: SOME BIND DN\n- \tcacert: |-\n- \t SOME CERTIFICATE HERE\n+ \tcacert: \"\"\n \t... // 11 identical lines\n \t\"\"\"\n )\n"}

{"level":"info","ts":1652426745.4501116,"logger":"cluster","msg":"Resource updated","cluster":"default/cb-kcluster","diff":" (\n \t\"\"\"\n \tauthenticationEnabled: true\n \tauthorizationEnabled: true\n \tbindDN: SOME BIND DN\n- \tcacert: |-\n- \t SOME CERTIFICATE HERE\n+ \tcacert: \"\"\n \t... // 11 identical lines\n \t\"\"\"\n )\n"}

→ Everything works at this point and I can log in via LDAP. Now I kill the pod:

{"level":"info","ts":1652426760.0685852,"logger":"cluster","msg":"Resource updated","cluster":"default/cb-cluster","diff":" (\n \t\"\"\"\n \t... // 100 identical lines\n \t ready:\n \t - cb-cluster-0019\n+ \t unready:\n \t - cb-cluster-0020\n \tsize: 2\n \t... // 9 identical lines\n \t\"\"\"\n )\n"}

{"level":"info","ts":1652426760.3767927,"logger":"cluster","msg":"unable to update status","cluster":"default/cb-cluster","error":"admission webhook \"couchbase-operator-admission.default.svc\" denied the request: validation failure list:\nspec.security.ldap.tlsSecret in body is required\nresource name may not be empty"}

{"level":"info","ts":1652426760.3961055,"logger":"cluster","msg":"Resource updated","cluster":"default/cb-cluster","diff":" (\n \t\"\"\"\n \t... // 100 identical lines\n \t ready:\n \t - cb-cluster-0019\n+ \t unready:\n \t - cb-cluster-0020\n \tsize: 2\n \t... // 9 identical lines\n \t\"\"\"\n )\n"}

{"level":"info","ts":1652426760.55743,"logger":"cluster","msg":"failed to update cluster status","cluster":"default/cb-cluster"}

{"level":"info","ts":1652426760.5634825,"logger":"cluster","msg":"Cluster status","cluster":"default/cb-cluster","balance":"balanced","rebalancing":false}

{"level":"info","ts":1652426760.5635242,"logger":"cluster","msg":"Node status","cluster":"default/cb-cluster","name":"cb-cluster-0019","version":"enterprise-7.0.3","class":"db2","managed":true,"status":"Active"}

{"level":"info","ts":1652426760.5635295,"logger":"cluster","msg":"Node status","cluster":"default/cb-cluster","name":"cb-cluster-0020","version":"enterprise-7.0.3","class":"db2","managed":true,"status":"Down"}
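To pick the relevant lines out of a longer operator log, a simple filter on the two failure messages seen in the extract is enough. This is only a sketch; the function name is made up, and it assumes the log keeps the same JSON layout as above.

```shell
# Keep only operator log lines that report a failed status update.
# Matches the two "msg" values that appear in the log extract.
failed_status_updates() {
  grep -E '"msg":"(unable to update status|failed to update cluster status)"'
}
```

The operator log can be piped into it, for example `kubectl logs <operator pod> | failed_status_updates`, where the pod name depends on your deployment.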

In addition, this is my complete LDAP configuration:

ldap:

authenticationEnabled: true

authorizationEnabled: true

hosts:

- < SOME HOST >

port: 636

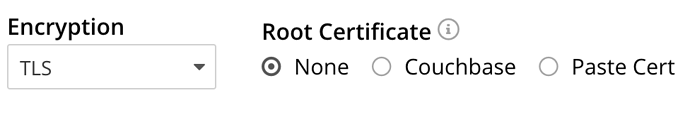

encryption: TLS

serverCertValidation: false

bindDN: < SOME BIND DN >

bindSecret: ldap-secret

userDNMapping:

query: < SOME QUERY >

groupsQuery: < SOME QUERY >

The problem is that I cannot use this in production as is, because the cluster does not become healthy again once a pod goes down.