Hello Everyone,

I’m running some test apps I have built, and I’m just trying to ascertain if this is normal behaviour or not.

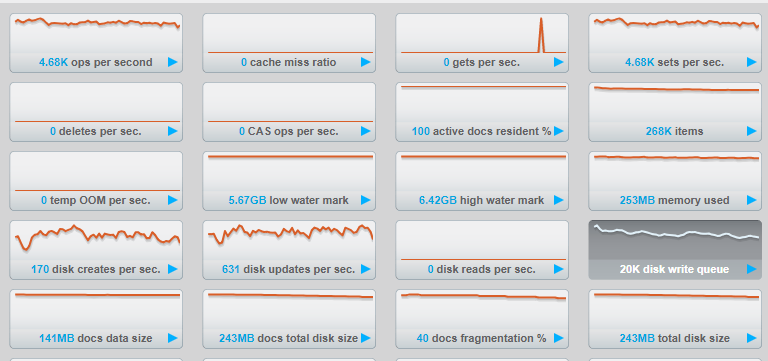

I have a high-frequency app that I have currently throttled to about 5k ops/second.

Every time I call Upsert on the documents generated by this app, my CPU and disk both go to 100% usage.

I am running Couchbase 4.6.2-3905 (enterprise version) with the latest CouchbaseNetClient.

Both systems are running as single nodes, not a cluster.

Both machines have SSDs.

One is a Dell XPS with a 1 TB M.2 drive, a 4c/8t i7-6700HQ @ 2.6 GHz (turbo up to 3.5 GHz), and 32 GB of DDR4 RAM.

The other machine is slightly older but still has a respectable 6c/12t i7-980X CPU, a Samsung 850 PRO SSD, and 24 GB of RAM.

I believe both of these machines should be well and truly capable of handling 5k Upserts per second.

When I comment out the lines of code that do the Upserts, my CPU usage remains virtually unchanged from when the app started, even though the app is still doing a fair bit of logic work.

When I add the lines back in, CPU and disk usage surge to 100% and the PC becomes unusable.

So I uninstalled Couchbase on the XPS and reinstalled it as a Memcached server only. As expected, disk usage now stays very low, at about 1%, but the CPU is still at 100%. Again, when I comment out the Upsert lines, CPU usage goes right down.

Although I have been playing around with Couchbase for a bit, I must admit I’m no expert, and there is a chance I have missed something extremely basic in the config/setup.

I have even moved the serialisation outside the Upsert function and passed Upsert a raw string, thinking the serialisation overhead might be the cause, but that wasn’t the case at all. The objects are very small.

Looking for advice and guidance.

Regards,

Marek

Just another edit (I hacked this together, and the models have been changed for confidentiality):

`{"Position":10,"Type":0,"Type2":5,"Value":0.0,"Type3":1,"Place":"Bla","Type":"Ex","Id":"11111"}`

    using System;
    using System.Collections.Generic;
    using Couchbase;
    using Couchbase.Configuration.Client;
    using Couchbase.Core;

    public class RootObject
    {
        public int Position { get; set; }
        public MyFirstType Type { get; set; }
        public MySecondType Type2 { get; set; }
        public double Value { get; set; }
        public MyThirdType Type3 { get; set; }
        public string Location { get; set; }
        public string Id { get; set; }
    }

    public enum MyFirstType
    {
        A,
        B
    }

    public enum MySecondType
    {
        C,
        D,
        E,
        F,
        G,
        H
    }

    public enum MyThirdType
    {
        I,
        J
    }

    public class CouchBaseInstanceTest
    {
        private Cluster CouchClient;
        private IBucket Bucket;

        public CouchBaseInstanceTest()
        {
            // Single local node, bootstrapped over the default management port.
            CouchClient = new Cluster(new ClientConfiguration
            {
                Servers = new List<Uri> { new Uri("http://localhost:8091") }
            });

            // If required
            //CouchClient.Authenticate("admin", "password");

            Bucket = CouchClient.OpenBucket("default");
        }

        // Upserts the value under the given key; expiry is given in hours.
        public bool Upsert(string key, dynamic value, double expiry)
        {
            var document = new Document<dynamic>
            {
                Id = key,
                Content = value,
                Expiry = TimeSpan.FromHours(expiry).ToTtl()
            };

            return Bucket.Upsert(document).Success;
        }
    }

To be honest, though, I think the code here is really irrelevant. When I replaced the document values with a blank string "", the problem was still the same.
